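Canal is Alibaba's incremental subscription and consumption component for MySQL binlogs. Businesses built on incremental log subscription and consumption include:

- Database mirroring

- Real-time database backup

- Multi-level indexes (separate sharded indexes for sellers and buyers)

- Search index building

- Business cache refresh

- Important business messages such as price changes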

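Project overview

Name: canal [kə'næl]

Meaning: waterway / pipeline / ditch

Language: pure Java

Positioning: parses database incremental logs to provide incremental data subscription and consumption; MySQL is the main database supported at present

Keywords: mysql binlog parser / real-time / queue&topic

How it works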

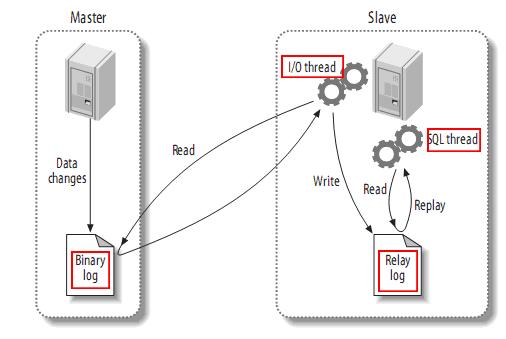

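MySQL master-slave replication

Viewed from a high level, replication has three steps:

- The master records changes in its binary log (these records are called binary log events and can be viewed with show binlog events);

- The slave copies the master's binary log events to its relay log;

- The slave replays the events in the relay log, applying the changes to its own data.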

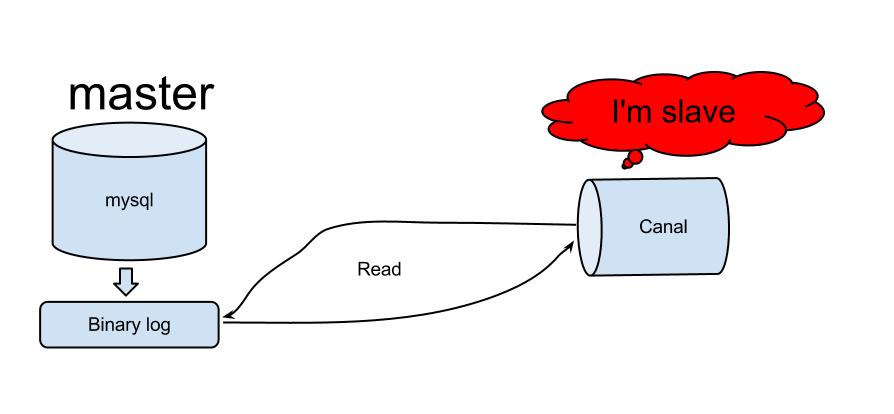
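The three replication steps can be mimicked with a toy in-memory model. This is only a sketch of the data flow, not how MySQL implements replication: the class and method names (`ToyReplication`, `masterWrite`, `ioThreadCopy`, `sqlThreadReplay`) are invented for illustration, and an "event" is just a `key=value` string.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of the three replication steps; every name here is hypothetical. */
final class ToyReplication {
    final List<String> binaryLog = new ArrayList<>();        // master's binary log
    final List<String> relayLog = new ArrayList<>();         // slave's relay log
    final Map<String, String> masterData = new HashMap<>();
    final Map<String, String> slaveData = new HashMap<>();
    private int copied = 0;    // binlog events already copied to the relay log
    private int replayed = 0;  // relay-log events already replayed

    /** Step 1: the master applies a change and records it as a binlog event. */
    void masterWrite(String key, String value) {
        masterData.put(key, value);
        binaryLog.add(key + "=" + value);
    }

    /** Step 2: the slave copies new binlog events into its relay log. */
    void ioThreadCopy() {
        while (copied < binaryLog.size()) {
            relayLog.add(binaryLog.get(copied++));
        }
    }

    /** Step 3: the slave replays relay-log events against its own data. */
    void sqlThreadReplay() {
        while (replayed < relayLog.size()) {
            String[] kv = relayLog.get(replayed++).split("=", 2);
            slaveData.put(kv[0], kv[1]);
        }
    }

    public static void main(String[] args) {
        ToyReplication r = new ToyReplication();
        r.masterWrite("price", "10");
        r.masterWrite("price", "12");
        r.ioThreadCopy();
        r.sqlThreadReplay();
        System.out.println(r.slaveData.equals(r.masterData)); // prints "true"
    }
}
```

The slave only catches up after both the copy and the replay have run, which is why a replica can lag behind its master.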

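How canal works:

The principle is fairly simple:

- canal emulates the MySQL slave interaction protocol, disguises itself as a MySQL slave, and sends a dump request to the MySQL master

- The MySQL master receives the dump request and starts pushing the binary log to the slave (that is, canal)

- canal parses the binary log objects (originally a byte stream)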
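To give a feel for what "parsing a byte stream" means in the last step, here is a minimal sketch that decodes the 19-byte common header that precedes every event in the MySQL binlog (format version 4): timestamp, event type, server id, event length, next position and flags, all little-endian. This is not canal's parser, which handles many event types and much more state; the class name `BinlogEventHeader` and the sample values are made up for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Sketch: decode the 19-byte common header of a binlog (v4) event. */
final class BinlogEventHeader {
    final long timestamp;     // seconds since the epoch (4 bytes)
    final int eventType;      // event type code (1 byte), e.g. 2 = QUERY_EVENT
    final long serverId;      // server_id of the originating server (4 bytes)
    final long eventLength;   // total event size, header included (4 bytes)
    final long nextPosition;  // offset of the next event in the file (4 bytes)
    final int flags;          // event flags (2 bytes)

    private BinlogEventHeader(ByteBuffer buf) {
        // Java has no unsigned ints, so widen and mask each field.
        timestamp = buf.getInt() & 0xFFFFFFFFL;
        eventType = buf.get() & 0xFF;
        serverId = buf.getInt() & 0xFFFFFFFFL;
        eventLength = buf.getInt() & 0xFFFFFFFFL;
        nextPosition = buf.getInt() & 0xFFFFFFFFL;
        flags = buf.getShort() & 0xFFFF;
    }

    static BinlogEventHeader parse(byte[] raw) {
        if (raw.length < 19) {
            throw new IllegalArgumentException("need at least 19 bytes");
        }
        return new BinlogEventHeader(ByteBuffer.wrap(raw).order(ByteOrder.LITTLE_ENDIAN));
    }

    public static void main(String[] args) {
        // Build a made-up header rather than reading a real binlog file.
        byte[] raw = ByteBuffer.allocate(19).order(ByteOrder.LITTLE_ENDIAN)
                .putInt(1480393930).put((byte) 2).putInt(1)
                .putInt(100).putInt(3407).putShort((short) 0)
                .array();
        BinlogEventHeader h = parse(raw);
        System.out.println(h.eventType + " " + h.serverId + " " + h.nextPosition); // prints "2 1 3407"
    }
}
```

The `nextPosition` field is the same kind of offset that canal later exposes on each entry header as the log file offset.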

Installing MySQL

Operating system: Ubuntu 16.04.2

Method 1: compile and install MySQL manually

Getting MySQL

    mkdir -p /usr/local/mysql
    cd /usr/local/mysql
    wget http://mirrors.sohu.com/mysql/MySQL-5.6/mysql-5.6.33.tar.gz
    tar -xvf mysql-5.6.33.tar.gz
    cd mysql-5.6.33

Create the MySQL directories

    mkdir -p /usr/local/mysql/mysql-m/etc /usr/local/mysql/mysql-m/database-m /usr/local/mysql/mysql-m/log

Compile and install with cmake

cmake was not previously installed on my system, so I installed it manually:

    apt-get install cmake

Then configure and build from the source directory:

    cmake . \
    -DCMAKE_INSTALL_PREFIX:PATH=/usr/local/mysql/mysql-m \
    -DMYSQL_DATADIR:PATH=/usr/local/mysql/mysql-m/database-m \
    -DSYSCONFDIR:PATH=/usr/local/mysql/mysql-m/etc \
    -DDEFAULT_COLLATION=utf8_general_ci \
    -DDEFAULT_CHARSET=utf8 \
    -DEXTRA_CHARSETS=all \
    -DMYSQL_TCP_PORT=3307 \
    -DWITH_DEBUG:BOOL=on

    make && make install

The configure step failed with the following error:

    -- Could NOT find Curses (missing: CURSES_LIBRARY CURSES_INCLUDE_PATH)
    CMake Error at cmake/readline.cmake:85 (MESSAGE):
      Curses library not found. Please install appropriate package,

      remove CMakeCache.txt and rerun cmake.On Debian/Ubuntu, package name is libncurses5-dev, on Redhat and derivates it is ncurses-devel.
    Call Stack (most recent call first):
      cmake/readline.cmake:128 (FIND_CURSES)
      cmake/readline.cmake:202 (MYSQL_USE_BUNDLED_EDITLINE)
      CMakeLists.txt:421 (MYSQL_CHECK_EDITLINE)

    -- Configuring incomplete, errors occurred!
    See also "/usr/local/mysql/mysql-5.6.33/CMakeFiles/CMakeOutput.log".
    See also "/usr/local/mysql/mysql-5.6.33/CMakeFiles/CMakeError.log".

Install libncurses5-dev:

    apt-get install libncurses5-dev

Once it is installed, remove CMakeCache.txt as the message advises and run cmake again.

Configuration file

Open the configuration file with vim /usr/local/mysql/mysql-m/etc/my.cnf and add:

    [mysql]
    socket=/usr/local/mysql/mysql-m/mysql-m.sock

    [mysqld]
    user=mysql
    port=3307
    basedir=/usr/local/mysql/mysql-m
    datadir=/usr/local/mysql/mysql-m/database-m
    socket=/usr/local/mysql/mysql-m/mysql-m.sock
    pid-file=/usr/local/mysql/mysql-m/mysql-m.pid

    [mysqld_safe]
    log-error=/usr/local/mysql/mysql-m/log/mysql-m-error.log

Copy the init script:

    cp /usr/local/mysql/mysql-m/support-files/mysql.server /etc/init.d/mysql-m

Then open it with vim /etc/init.d/mysql-m and change conf to conf=/usr/local/mysql/mysql-m/etc/my.cnf

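Initializing the database

    /usr/local/mysql/mysql-m/scripts/mysql_install_db --basedir=/usr/local/mysql/mysql-m --datadir=/usr/local/mysql/mysql-m/database-m --user=mysql

Starting the service

    /usr/local/mysql/mysql-m/bin/mysqld_safe &

Check the mysql process:

    ps -ef | grep mysql

Enter the MySQL client by simply typing mysql, then run:

    use mysql;
    create user root;
    update user set Password = PASSWORD('root') where user = 'root';
    flush privileges;

The final flush privileges makes the direct update to the user table take effect.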

Method 2: download and install from the official MySQL site

Download and install

    wget http://cdn.mysql.com//Downloads/MySQL-5.7/mysql-server_5.7.16-1ubuntu16.04_amd64.deb-bundle.tar
    tar -xvf mysql-server_5.7.16-1ubuntu16.04_amd64.deb-bundle.tar
    dpkg -i mysql-server_5.7.16-1ubuntu16.04_amd64.deb
    dpkg -i mysql-client_5.7.16-1ubuntu16.04_amd64.deb

Configuring mysql.cnf

My configuration file is /etc/mysql/my.cnf, with the following content:

    root@bls:/etc/mysql# cat my.cnf
    #
    # The MySQL database server configuration file.
    #
    # You can copy this to one of:
    # - "/etc/mysql/my.cnf" to set global options,
    # - "~/.my.cnf" to set user-specific options.
    #
    # One can use all long options that the program supports.
    # Run program with --help to get a list of available options and with
    # --print-defaults to see which it would actually understand and use.
    #
    # For explanations see
    # http://dev.mysql.com/doc/mysql/en/server-system-variables.html
    #
    # * IMPORTANT: Additional settings that can override those from this file!
    # The files must end with '.cnf', otherwise they'll be ignored.
    #
    !includedir /etc/mysql/conf.d/
    !includedir /etc/mysql/mysql.conf.d/

The actual settings live in the conf.d folder. Go into conf.d and edit mysql.cnf:

    [mysqld]
    log-bin=mysql-bin   # adding this line is enough to enable the binlog
    binlog-format=ROW   # use row mode: canal is built on the MySQL binlog, so binlog writing must be enabled, and row format is recommended
    server_id=1         # required for MySQL replication; must not be the same as canal's slaveId

    # Below is my own configuration; the lines above are in fact sufficient
    root@bls:/etc/mysql/conf.d# cat mysql.cnf
    [mysql]
    # default character set for the mysql client
    default-character-set=utf8

    [mysqld]
    #skip_grant_tables  leave this off for now; it disables privilege checks
    # listen on port 3306
    port = 3306
    # maximum number of connections
    max_connections=200
    # the server character set otherwise defaults to the 8-bit latin1 encoding
    character-set-server=utf8
    # default storage engine for new tables
    default-storage-engine=INNODB
    sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES
    #master-slave: master settings
    log-bin=mysql-bin
    server-id=1
    binlog_format=Row
    log_bin=/var/log/mysql/mysql-bin1.log

Canal works by posing as a MySQL slave, so the account it connects with must be granted the privileges a MySQL slave needs.

    CREATE USER canal IDENTIFIED BY 'canal';
    GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
    FLUSH PRIVILEGES;
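The three binlog requirements from the configuration (binlog enabled, row format, and a server id that differs from canal's slaveId) are easy to get wrong, so a small pre-flight check can help. The sketch below scans the text of a my.cnf-style file; it is a simplified, hypothetical helper (`BinlogConfigCheck` is not part of MySQL or canal) that ignores `!includedir` directives and treats everything after `#` as a comment.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Sketch: check a my.cnf fragment for the settings canal relies on. */
final class BinlogConfigCheck {

    /** Collects options from the [mysqld] section, treating '-' and '_' in names alike. */
    static Map<String, String> mysqldOptions(String cnf) {
        Map<String, String> options = new HashMap<>();
        String section = "";
        for (String line : cnf.split("\\R")) {
            String s = line.trim();
            int hash = s.indexOf('#');
            if (hash >= 0) {
                s = s.substring(0, hash).trim();   // drop comments (simplification)
            }
            if (s.isEmpty()) {
                continue;
            }
            if (s.startsWith("[") && s.endsWith("]")) {
                section = s.substring(1, s.length() - 1).trim();
                continue;
            }
            if (!section.equals("mysqld")) {
                continue;
            }
            String[] kv = s.split("=", 2);
            String key = kv[0].trim().replace('-', '_').toLowerCase();
            options.put(key, kv.length > 1 ? kv[1].trim() : "");
        }
        return options;
    }

    /** Returns a human-readable list of problems; empty means the basics look fine. */
    static List<String> problems(String cnf, long canalSlaveId) {
        Map<String, String> o = mysqldOptions(cnf);
        List<String> problems = new ArrayList<>();
        if (!o.containsKey("log_bin")) {
            problems.add("log-bin is not set");
        }
        if (!"ROW".equalsIgnoreCase(o.get("binlog_format"))) {
            problems.add("binlog-format is not ROW");
        }
        String serverId = o.get("server_id");
        if (serverId == null) {
            problems.add("server-id is not set");
        } else if (serverId.equals(Long.toString(canalSlaveId))) {
            problems.add("server-id equals canal slaveId");
        }
        return problems;
    }

    public static void main(String[] args) {
        String cnf = "[mysqld]\n"
                + "log-bin=mysql-bin\n"
                + "binlog-format=ROW\n"
                + "server_id=1\n";
        System.out.println(problems(cnf, 1234)); // prints "[]"
        System.out.println(problems(cnf, 1));    // prints "[server-id equals canal slaveId]"
    }
}
```

A file check like this only covers what is written on disk; the values the running server actually uses can be confirmed with show variables.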

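Enabling, viewing, and deleting the binlog

Check whether the binlog is enabled

    show variables like 'log_bin';

Show the binlog file currently being written

    show master status;

List the binlogs on the master

    show master logs;

Show the contents of the first binlog file only

    show binlog events;

Show the contents of a specific binlog file

    show binlog events in 'mysql-bin.000001';

Set the binlog expiry time (in days)

    set global expire_logs_days=1;

Delete binlogs manually

    reset master;  -- delete all of the master's binlogs
    purge master logs before '2012-03-30 17:20:00';  -- delete the binlog files listed in the log index that are older than the given date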

Installing and configuring Canal

Download and install

Cloning the source with git and building it is recommended:

    git clone git@github.com:alibaba/canal.git
    cd canal
    mvn clean install -Dmaven.test.skip -Denv=release

When the build finishes, target/canal.deployer-$version.tar.gz is produced under the project root.

Unpack

    mkdir /tmp/canal
    tar zxvf canal.deployer-$version.tar.gz -C /tmp/canal

After unpacking, enter /tmp/canal and you will see the following layout:

    root@bls:/tmp/canal# ll
    total 32
    drwxr-xr-x  6 root root  4096 Nov 29 12:31 ./
    drwxrwxrwt 17 root root 12288 Nov 29 14:35 ../
    drwxr-xr-x  2 root root  4096 Nov 29 12:35 bin/
    drwxr-xr-x  4 root root  4096 Nov 29 12:31 conf/
    drwxr-xr-x  2 root root  4096 Nov 29 12:31 lib/
    drwxrwxrwx  4 root root  4096 Nov 29 12:32 logs/

Modifying the configuration

Open conf/example/instance.properties with vim and adjust it to match your own environment:

    #################################################
    ## mysql serverId
    canal.instance.mysql.slaveId = 1234

    # position info; change to your own database details
    canal.instance.master.address = 127.0.0.1:3306
    canal.instance.master.journal.name =
    canal.instance.master.position =
    canal.instance.master.timestamp =

    #canal.instance.standby.address =
    #canal.instance.standby.journal.name =
    #canal.instance.standby.position =
    #canal.instance.standby.timestamp =

    # username/password; change to your own database details
    canal.instance.dbUsername = canal
    canal.instance.dbPassword = canal
    canal.instance.defaultDatabaseName =
    canal.instance.connectionCharset = UTF-8

    # table regex
    canal.instance.filter.regex = .*\\..*
    #################################################

Starting

    sh bin/startup.sh

Viewing the log

    root@bls:/tmp/canal# cat logs/canal/canal.log
    Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=96m; support was removed in 8.0
    Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=256m; support was removed in 8.0
    Java HotSpot(TM) 64-Bit Server VM warning: UseCMSCompactAtFullCollection is deprecated and will likely be removed in a future release.
    2016-11-29 12:32:10.934 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## start the canal server.
    2016-11-29 12:32:11.003 [main] INFO com.alibaba.otter.canal.deployer.CanalController - ## start the canal server[192.168.220.68:11111]
    2016-11-29 12:32:11.448 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## the canal server is running now ......
    2016-11-29 12:33:20.939 [Thread-3] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## stop the canal server
    2016-11-29 12:33:20.954 [Thread-3] INFO com.alibaba.otter.canal.deployer.CanalController - ## stop the canal server[192.168.220.68:11111]
    2016-11-29 12:33:20.954 [Thread-3] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## canal server is down.

Stopping

    sh bin/stop.sh

Using Canal

My project is a Maven project, so I add the dependency to the pom:

    <!-- canal -->
    <dependency>
        <groupId>com.alibaba.otter</groupId>
        <artifactId>canal.client</artifactId>
        <version>1.0.12</version>
    </dependency>

Adding the code

BinaryLogEntity.java

    package com.schedulers.dto;

    import com.alibaba.otter.canal.protocol.CanalEntry;

    import java.util.List;

    /**
     * Created by xiehui1956(@)gmail.com on 16-11-30.
     * Wraps one binlog record.
     */
    public class BinaryLogEntity {

        /** Binary log file name. */
        private String logFileName;

        /** Position of the record in the log file. */
        private long offSet;

        /** Database name. */
        private String databaseName;

        /** Table name. */
        private String tableName;

        /** Event type. */
        private CanalEntry.EventType eventType;

        public static class EventType {
            /** Update operation. */
            public static final String UPDATE = "UPDATE";
            /** Delete operation. */
            public static final String DELETE = "DELETE";
            /** Insert operation. */
            public static final String INSERT = "INSERT";
        }

        /** Column values before the operation; only populated for UPDATE. */
        private List<FieldUpdate> beforeFieldUpdate;

        /** Column values after the operation; only populated for UPDATE. */
        private List<FieldUpdate> afterFieldUpdate;

        /** Columns of the affected row; populated for INSERT and DELETE. */
        private List<FieldUpdate> fields;

        public String getLogFileName() {
            return logFileName;
        }

        public void setLogFileName(String logFileName) {
            this.logFileName = logFileName;
        }

        public long getOffSet() {
            return offSet;
        }

        public void setOffSet(long offSet) {
            this.offSet = offSet;
        }

        public String getDatabaseName() {
            return databaseName;
        }

        public void setDatabaseName(String databaseName) {
            this.databaseName = databaseName;
        }

        public String getTableName() {
            return tableName;
        }

        public void setTableName(String tableName) {
            this.tableName = tableName;
        }

        public CanalEntry.EventType getEventType() {
            return eventType;
        }

        public void setEventType(CanalEntry.EventType eventType) {
            this.eventType = eventType;
        }

        public List<FieldUpdate> getBeforeFieldUpdate() {
            return beforeFieldUpdate;
        }

        public void setBeforeFieldUpdate(List<FieldUpdate> beforeFieldUpdate) {
            this.beforeFieldUpdate = beforeFieldUpdate;
        }

        public List<FieldUpdate> getAfterFieldUpdate() {
            return afterFieldUpdate;
        }

        public void setAfterFieldUpdate(List<FieldUpdate> afterFieldUpdate) {
            this.afterFieldUpdate = afterFieldUpdate;
        }

        public List<FieldUpdate> getFields() {
            return fields;
        }

        public void setFields(List<FieldUpdate> fields) {
            this.fields = fields;
        }

        @Override
        public String toString() {
            return "BinaryLogEntity{" +
                    "logFileName='" + logFileName + '\'' +
                    ", offSet=" + offSet +
                    ", databaseName='" + databaseName + '\'' +
                    ", tableName='" + tableName + '\'' +
                    ", eventType=" + eventType +
                    ", beforeFieldUpdate=" + beforeFieldUpdate +
                    ", afterFieldUpdate=" + afterFieldUpdate +
                    ", fields=" + fields +
                    '}';
        }
    }

FieldUpdate.java

    package com.schedulers.dto;

    /**
     * An update carries every column of the row; columns whose update flag is true are the ones that changed.
     */
    public class FieldUpdate {

        /** Whether the column is part of the primary key. */
        private boolean isKey;

        /** Column name. */
        private String fieldName;

        /** Column value. */
        private String fieldValue;

        /** Whether the column was updated. */
        private boolean isUpdate;

        public boolean isKey() {
            return isKey;
        }

        public void setKey(boolean key) {
            isKey = key;
        }

        public String getFieldName() {
            return fieldName;
        }

        public void setFieldName(String fieldName) {
            this.fieldName = fieldName;
        }

        public String getFieldValue() {
            return fieldValue;
        }

        public void setFieldValue(String fieldValue) {
            this.fieldValue = fieldValue;
        }

        public boolean isUpdate() {
            return isUpdate;
        }

        public void setUpdate(boolean update) {
            isUpdate = update;
        }

        @Override
        public String toString() {
            return "FieldUpdate{" +
                    "isKey=" + isKey +
                    ", fieldName='" + fieldName + '\'' +
                    ", fieldValue='" + fieldValue + '\'' +
                    ", isUpdate=" + isUpdate +
                    '}';
        }
    }

CanalClientService.java

    package com.schedulers.canal;

    import com.alibaba.otter.canal.client.CanalConnector;
    import com.alibaba.otter.canal.client.CanalConnectors;
    import com.alibaba.otter.canal.common.utils.AddressUtils;
    import com.alibaba.otter.canal.protocol.CanalEntry;
    import com.alibaba.otter.canal.protocol.Message;
    import com.schedulers.dto.BinaryLogEntity;
    import com.schedulers.dto.FieldUpdate;

    import java.net.InetSocketAddress;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.concurrent.ArrayBlockingQueue;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    /**
     * Created by xiehui1956(@)gmail.com on 16-12-1.
     */
    public class CanalClientService {

        /** Queue of parsed binlog records. */
        private ArrayBlockingQueue<BinaryLogEntity> blockingBinaryLogQueue = null;

        private static final int THREADS = 10;

        /** Number of retries when the queue is full. */
        private static final int FAIL_REPEAT = 6;

        public void init() {
            blockingBinaryLogQueue = new ArrayBlockingQueue<>(5000);
            ExecutorService executorService = Executors.newFixedThreadPool(THREADS);
            for (int i = 0; i < THREADS; i++) {
                executorService.submit(new Runnable() {
                    @Override
                    public void run() {
                        // Keep consuming; without this loop each worker would exit after one record.
                        while (!Thread.currentThread().isInterrupted()) {
                            try {
                                BinaryLogEntity logEntity = blockingBinaryLogQueue.take();
                                System.out.println(logEntity);
                            } catch (InterruptedException e) {
                                // Restore the interrupt flag so the loop ends.
                                Thread.currentThread().interrupt();
                            }
                        }
                    }
                });
            }
        }

        public static void main(String args[]) {
            CanalClientService clientService = new CanalClientService();
            clientService.init();
            clientService.run();
        }

        public void run() {
            String hostIp = AddressUtils.getHostIp();
            // Create the connection
            CanalConnector connector = CanalConnectors.newSingleConnector(
                    new InetSocketAddress(hostIp, 11111), "example", "", "");
            int batchSize = 1000;
            try {
                connector.connect();
                connector.subscribe(".*\\..*");
                connector.rollback();
                while (true) {
                    Message message = connector.getWithoutAck(batchSize); // fetch up to batchSize entries
                    long batchId = message.getId();
                    int size = message.getEntries().size();
                    if (batchId == -1 || size == 0) {
                        try {
                            Thread.sleep(1000);
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                            break;
                        }
                    } else {
                        printEntry(message.getEntries());
                    }
                    connector.ack(batchId); // acknowledge the batch
                }
            } finally {
                connector.disconnect();
            }
        }

        private void printEntry(List<CanalEntry.Entry> entrys) {
            for (CanalEntry.Entry entry : entrys) {
                // Transaction begin/end markers carry no row data.
                if (entry.getEntryType() == CanalEntry.EntryType.TRANSACTIONBEGIN
                        || entry.getEntryType() == CanalEntry.EntryType.TRANSACTIONEND) {
                    continue;
                }

                CanalEntry.RowChange rowChange;
                try {
                    rowChange = CanalEntry.RowChange.parseFrom(entry.getStoreValue());
                } catch (Exception e) {
                    throw new RuntimeException(
                            "ERROR ## parser of eromanga-event has an error , data:" + entry.toString(), e);
                }

                BinaryLogEntity binaryLog = new BinaryLogEntity();
                binaryLog.setLogFileName(entry.getHeader().getLogfileName());
                binaryLog.setOffSet(entry.getHeader().getLogfileOffset());
                binaryLog.setDatabaseName(entry.getHeader().getSchemaName());
                binaryLog.setTableName(entry.getHeader().getTableName());
                binaryLog.setEventType(rowChange.getEventType());

                CanalEntry.EventType eventType = rowChange.getEventType();
                // Note: if one statement changes several rows, only the last row's values remain in binaryLog.
                for (CanalEntry.RowData rowData : rowChange.getRowDatasList()) {
                    if (eventType == CanalEntry.EventType.DELETE) {
                        // A deleted row only has a before-image.
                        binaryLog.setFields(buildField(rowData.getBeforeColumnsList()));
                    } else if (eventType == CanalEntry.EventType.INSERT) {
                        // An inserted row only has an after-image; the before-image is empty.
                        binaryLog.setFields(buildField(rowData.getAfterColumnsList()));
                    } else if (eventType == CanalEntry.EventType.UPDATE) {
                        binaryLog.setBeforeFieldUpdate(buildField(rowData.getBeforeColumnsList()));
                        binaryLog.setAfterFieldUpdate(buildField(rowData.getAfterColumnsList()));
                    }
                }

                // Retry a few times if the queue is full; the record is dropped if every attempt fails.
                int i = 0;
                while (!blockingBinaryLogQueue.offer(binaryLog) && i < FAIL_REPEAT) {
                    i++;
                }
            }
        }

        private List<FieldUpdate> buildField(List<CanalEntry.Column> columns) {
            List<FieldUpdate> fieldUpdates = new ArrayList<>();
            for (CanalEntry.Column column : columns) {
                FieldUpdate fieldUpdate = new FieldUpdate();
                fieldUpdate.setFieldName(column.getName());
                fieldUpdate.setFieldValue(column.getValue());
                fieldUpdate.setUpdate(column.getUpdated());
                fieldUpdate.setKey(column.getIsKey());
                fieldUpdates.add(fieldUpdate);
            }
            return fieldUpdates;
        }
    }
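The expression passed to connector.subscribe, like canal.instance.filter.regex in instance.properties, is a regular expression matched against schema.table names, and several expressions can be separated by commas. The sketch below illustrates that idea with plain java.util.regex; `TableFilter` is a hypothetical stand-in, and canal's own filter implementation may differ in details such as case handling. Note that in a Java string literal, as in a .properties file, `\\.` stands for the regex `\.`, a literal dot.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Sketch of a schema.table filter in the spirit of canal's subscribe() expression. */
final class TableFilter {
    private final List<Pattern> patterns = new ArrayList<>();

    /** @param expression comma-separated regular expressions, e.g. ".*\\..*" */
    TableFilter(String expression) {
        for (String part : expression.split(",")) {
            if (!part.trim().isEmpty()) {
                patterns.add(Pattern.compile(part.trim()));
            }
        }
    }

    /** True when "schema.table" fully matches at least one of the patterns. */
    boolean accepts(String schema, String table) {
        String name = schema + "." + table;
        for (Pattern p : patterns) {
            if (p.matcher(name).matches()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        TableFilter all = new TableFilter(".*\\..*");
        TableFilter some = new TableFilter("fc\\..*,test\\.user");
        System.out.println(all.accepts("fc", "fc_school"));   // prints "true"
        System.out.println(some.accepts("fc", "fc_school"));  // prints "true"
        System.out.println(some.accepts("mysql", "user"));    // prints "false"
    }
}
```

Narrowing the expression to the schemas you care about keeps system tables such as those in the mysql schema out of the consumer.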

Results:

Delete operation:

    mysql> desc fc_school;
    +-------+--------------+------+-----+---------+----------------+
    | Field | Type         | Null | Key | Default | Extra          |
    +-------+--------------+------+-----+---------+----------------+
    | id    | int(11)      | NO   | PRI | NULL    | auto_increment |
    | name  | varchar(128) | YES  |     | NULL    |                |
    | age   | int(11)      | YES  |     | NULL    |                |
    +-------+--------------+------+-----+---------+----------------+
    3 rows in set (0.00 sec)

    mysql> select * from fc_school;
    +----+--------+------+
    | id | name   | age  |
    +----+--------+------+
    |  1 | 张三   |    2 |
    |  2 | 李四   |    2 |
    |  3 | 王五   |    5 |
    |  4 | 赵六   |    3 |
    +----+--------+------+
    4 rows in set (0.00 sec)

    mysql>
    mysql> delete from fc_school where id = 4;
    Query OK, 1 row affected (0.08 sec)

Binlog record:

    BinaryLogEntity{logFileName='mysql-bin1.000021', offSet=3407, databaseName='fc', tableName='fc_school', eventType=DELETE, beforeFieldUpdate=null, afterFieldUpdate=null, fields=[FieldUpdate{isKey=true, fieldName='id', fieldValue='4', isUpdate=false}, FieldUpdate{isKey=false, fieldName='name', fieldValue='赵六', isUpdate=false}, FieldUpdate{isKey=false, fieldName='age', fieldValue='3', isUpdate=false}]}

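Insert operation:

    mysql>
    mysql> insert into fc_school values (4, '赵六', 3);
    Query OK, 1 row affected (0.10 sec)

Binlog record:

    BinaryLogEntity{logFileName='mysql-bin1.000021', offSet=3679, databaseName='fc', tableName='fc_school', eventType=INSERT, beforeFieldUpdate=null, afterFieldUpdate=null, fields=[]}

The fields list is empty in this captured output because the run populated it from rowData.getBeforeColumnsList(), and an INSERT has no before-image. Populating it from rowData.getAfterColumnsList() yields the inserted row.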
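Which row image actually carries data depends on the event type: an INSERT only has an after-image, a DELETE only has a before-image, and an UPDATE has both. The sketch below captures that rule with stand-in types; `RowImages` and its `EventType` enum are hypothetical and merely mirror the three canal event types used in this article.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Sketch: pick the row image that holds the row's values for each event type. */
final class RowImages {
    /** Stand-in for the three canal event types handled in this article. */
    enum EventType { INSERT, UPDATE, DELETE }

    static List<String> populatedImage(EventType type, List<String> before, List<String> after) {
        switch (type) {
            case DELETE:
                return before;   // the row no longer exists, so only the old values are known
            case INSERT:         // the row did not exist before, so only the new values are known
            case UPDATE:         // both images exist; the after-image holds the current values
            default:
                return after;
        }
    }

    public static void main(String[] args) {
        List<String> none = Collections.emptyList();
        List<String> row = Arrays.asList("id=4", "age=3");
        System.out.println(populatedImage(EventType.INSERT, none, row)); // prints "[id=4, age=3]"
        System.out.println(populatedImage(EventType.DELETE, row, none)); // prints "[id=4, age=3]"
    }
}
```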

Update operation:

    mysql> update fc_school set age = 6 where id = 3;
    Query OK, 1 row affected (0.08 sec)
    Rows matched: 1 Changed: 1 Warnings: 0

Binlog record:

    BinaryLogEntity{logFileName='mysql-bin1.000021', offSet=3951, databaseName='fc', tableName='fc_school', eventType=UPDATE, beforeFieldUpdate=[FieldUpdate{isKey=true, fieldName='id', fieldValue='3', isUpdate=false}, FieldUpdate{isKey=false, fieldName='name', fieldValue='王五', isUpdate=false}, FieldUpdate{isKey=false, fieldName='age', fieldValue='5', isUpdate=false}], afterFieldUpdate=[FieldUpdate{isKey=true, fieldName='id', fieldValue='3', isUpdate=false}, FieldUpdate{isKey=false, fieldName='name', fieldValue='王五', isUpdate=false}, FieldUpdate{isKey=false, fieldName='age', fieldValue='6', isUpdate=true}], fields=null}
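Because an update record carries every column in both images, a consumer usually wants just the columns whose update flag is true. The sketch below pairs the before and after lists to produce an old-to-new summary; `ChangedColumns` and its nested `Field` class are simplified, hypothetical stand-ins for the FieldUpdate DTO above, and the sample data copies the id and age columns from the record just shown (the name column is left out for brevity).

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Sketch: summarise which columns an UPDATE actually changed. */
final class ChangedColumns {
    /** Minimal stand-in for the article's FieldUpdate DTO. */
    static final class Field {
        final String name;
        final String value;
        final boolean updated;

        Field(String name, String value, boolean updated) {
            this.name = name;
            this.value = value;
            this.updated = updated;
        }
    }

    /** Maps each updated column name to "old -> new", in column order. */
    static Map<String, String> diff(List<Field> before, List<Field> after) {
        Map<String, String> oldValues = new LinkedHashMap<>();
        for (Field f : before) {
            oldValues.put(f.name, f.value);
        }
        Map<String, String> changes = new LinkedHashMap<>();
        for (Field f : after) {
            if (f.updated) {
                changes.put(f.name, oldValues.get(f.name) + " -> " + f.value);
            }
        }
        return changes;
    }

    public static void main(String[] args) {
        List<Field> before = Arrays.asList(new Field("id", "3", false), new Field("age", "5", false));
        List<Field> after = Arrays.asList(new Field("id", "3", false), new Field("age", "6", true));
        System.out.println(diff(before, after)); // prints "{age=5 -> 6}"
    }
}
```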

That's all.